Features vs. Requirements - Threading

Sun, Nov 22, 2015 ❝... on the difference between a feature and a requirement❞Contents

Initially, a program was built up of statements that pause execution at a statement until the statement was completely executed. This is called a synchronous calling. This means that if you issue a statement for copying a file, the program would not continue with the next statement until the complete file was copied. As you can imagine, this interferes with the need to be available as soon as a copy action is initiated in case the user issues another command. The next command would only be executed after the file was completely copied, thus the statement finished. You are also not able to do progress updates or update your program’s window without interrupting the copy process in some way. So you can imagine that this is very inconvenient and severely degrades the user experience. This is where asynchrony and non-blocking semantics come into play. This article is on the subject of asynchronous execution and thread-safety. The next article looks at the notions of (non-)blocking semantics.

In many cases multi-threading is necessary. With the use of multi-threading comes the risk of thread-safety issues in data structures. Though it is not hard to understand that something bad may happen if memory is changed by multiple threads simultaneously, it is quite hard to reason about all the different situations that may occur and how that may manifest itself when a library is used in the wrong way. That is where thread-safety comes in. One can be super safe and implement everything thread-safely, but that comes at a cost too. The second part of this article is about thread-safety in data structures.

Managing multi-threading

Asynchronous execution is a mechanism to execute something in a way that you do not have to wait for it to complete before control is returned to the caller. You start something “in the background”, i.e. in another thread, such that its execution does not take up the “attention” of the current thread. At a later point in time we reach back to a statement that we initiated earlier and get its processing result.

The control that you have retained can be used to handle other requests such as window redraws, user commands and progress updates. There are a number of manifestations of non-blocking semantics such as asynchronous execution using a “future” as a reference to the asynchronous operation and callback mechanisms that get called immediately after the asynchronous operation finishes. Not all of them require multiple threads of execution.

First, let’s look at some mechanisms for managing asynchronous execution. There are 2 well known mechanisms for “managing” asynchronous execution: futures and callback methods. Afterwards, we’ll look at a case where multi-threading is a valid application.

Multi-threading - futures, promises, and then … continuations

The first mechanism is that of Futures and Promises. Futures and promises are two halves of one solution. Conceptually, the idea behind futures and promises is to asynchronously start a computation and let the result come back to you when the calculation is done. In the mean time, your main thread is free to do whatever intermediate activity is suitable, e.g. keep a UI responsive or do/start other computations.

Futures and promises each provide one half of the solution. The promise is the write-only half that goes with the asynchronous thread performing the computation. Once the computation is complete, you write your answer to the promise, i.e. you’re fulfilling your promise to do this computation. Now, you might think: What happens when the computation fails? Languages that support exceptions typically allow you to set an exception instance in case of failure.

The future, on the other hand, is the read-only part of the solution that will at some point in the future contain the result of the asynchronously executing computation provided through the promise. (Though not immediately.) You acquire the future as a result of calling an asynchronous method and therefore the future will start out “empty”. To get to the answer, one calls get(). It will block from that point on until the answer is provided - via the corresponding promise, by the asynchronously executing code - and returns the result at that point. Or, in the case where the computation failed and an exception was set instead of a result, it will throw an exception. Alternatively, one can poll the future to check if an answer is available without blocking.

So far, so good. This mechanism provides both blocking and non-blocking options. Now imagine that the method was provided by a library. You get back a future and simply wait for the computation result. What if you want to add some extra work to your (asynchronous) execution? That is what is called a continuation.

It is actually not possible to get into the asynchronous thread. You never actually got control of its execution, since it was immediately executed asynchronously. So, how do you add the work? The most straight-forward way is to start another asynchronous method that starts by waiting on the computation result (blocking on get()) and once the result is available, do the extra work. You are basically chaining one asynchronous method after another. jQuery, among others, provides a then() method that does the chaining for you. Simply provide your to-be-asynchronously-executed method and it will be “chained” after the first. It will be called as soon as the original async execution finishes. For efficiency reasons, the same asynchronous thread will likely call the chained method, but this is not required.

Multi-threading - callbacks

Another mechanism for getting into control of asynchronously executing code is the famous callback method. A callback is a function or method that is provided to be called when asynchronous execution is finished. As the name suggests, it is a way to call back “home” to the original caller of the asynchronous method.

A small caveat for this mechanism is that the callback method is called from the thread of execution of the asynchronous method. In a multi-threaded context, we need to make sure that the callback is thread-safe. Even in a single-threaded context, it can be challenging to thoroughly understand at what times a callback may be invoked, i.e. what state the memory could possibly be in.

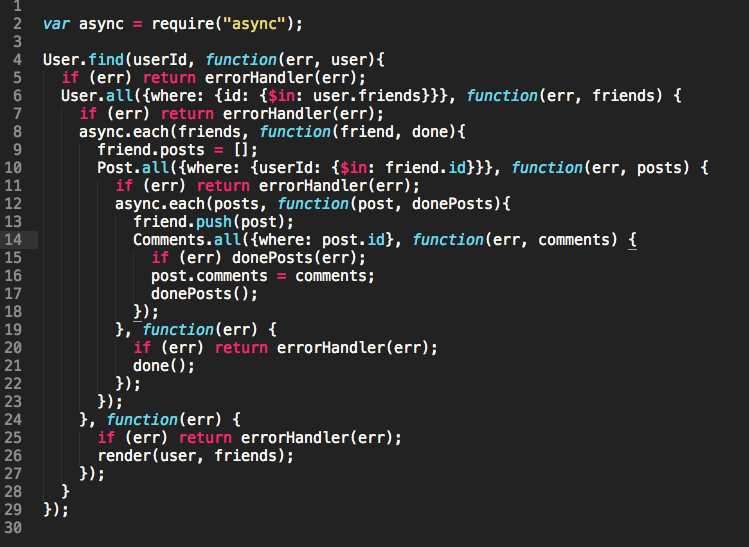

Callbacks are probably most famous for the (heavy) use in Javascript. It is not unheard of to find 7 or 8 levels of callbacks.

Don’t - simplicity for user and author

Essentially, of whether and when to use threads should be a choice made by the user. If threading is not for (purely) internal use, then it is not a choice for the library author. Forcing threading mechanisms onto the user makes for a more complex situation for several reasons. Firstly, we introduce multi-threading where it was not necessary. This means that the library author has to start managing multiple threads. Secondly, we force threading mechanisms onto the user even though we cannot be sure they are wanted. Thirdly, you get into this question of who ultimately “owns” (manages) the spawned thread, as we would not want to leave the thread unattended for.

It can be tricky to manage multiple threads and thread safety. There are some subtle issues that only ever grow the amount of overhead on each application and for an optimal implementation should be applied sparingly.

Furthermore, there is an indirect issue. In the case of asynchronous execution as an attempt to manage multiple threads: notice how we made a simple synchronous operation more complicated, twice, in order to (re)acquire synchronous calling semantics? Let me clarify this. To avoid blocking, the method is executed asynchronously. Now we want to perform subsequent actions, which would be easiest if the method would have executed synchronously. However, it isn’t, so we chain a new asynchronously executing method after the first. then() makes it look nice but it does not simplify the actual situation. In fact, the situation is at least as complicated as it was before, more complicated if you count for the newly added chained method. So we should only execute a method asynchronously, if we already know that nothing needs to be chained to it afterwards. The user should be in control of the moment when an operation is executed asynchronously, if at all.

As for callbacks. The mechanism of calling back as a way to provide a result after the computation is finished is tricky to use at best. You may end up at “the wrong time” meaning that the application is in an unanticipated state and you will end up in a different thread, meaning that you anything you touch in the callback implementation must be thread-safe.

Using callbacks one can accomplish whatever needs to be done. However, as you can see for example in the image above, there are drawbacks to this mechanism. When the requirements of the application get sufficiently complex, the construction becomes so complicated that it takes quite a bit of study to fully understand whether all the details of the written implementation match your expectations and the envisioned solution. This becomes especially relevant when many libraries that you use all provide this mechanism and deep nesting becomes unavoidable. With every level of callbacks it gets harder to reason about every possible permutation of potential callback events.

Multi-threading - library speed-up

There are valid cases for leveraging multi-threading. An example of this is internally applying threads: to run a number of operations in parallel as to speed up processing or to select from a number of alternative results. The distinguishing characteristic for this is that these threads are fully internalized in the library and not exposed to the user. The user-facing methods all still have synchronous calling semantics, i.e. blocking behavior.

Thread-safety

A similar case to managing multiple threads can be made for managing thread-safety. In this case we are referring to properties of data structures and algorithms. We need to make a distinction here, as there are cases to be made for both sides. Properties that are undesirable in the normal case, may be a prime feature in the special case. This is true in case of thread-safety.

Thread-safety is not a first-class property

Thread-safety is an example of a property that is useful in certain use cases, those were multi-threading is required and hence thread-safety is a necessity. However, in a single threaded context the data structure or algorithm typically suffers penalties because of the added guarantee of thread-safety.

Don’t make your data structure thread-safe by default if there is a trade-off of any kind. A data structure’s original purpose is to structure data. The user of your data structure can make it thread-safe if it is required. On the other hand, if you make your data structure thread-safe at the cost of performance for a user that does not require thread-safety, then he will have used a suboptimal data structure because of an unused secondary property. Another possibility is the risk of lock contention if state is updated frequently and variables are kept in some thread-safe form such as atomic types.

One can make a nuance here, if one would create a data structure for the purpose of making it high performant specifically for multi-threaded use. However, that means that thread-safety is part of the data structure’s design, not something bolted on afterwards. You can identify a data structure that provides thread-safety as an honest first-class feature if the data structure is structured specifically for the purpose of multi-threading. If this is the case, there should also exist a data structure that provides almost exactly the same features - apart from thead-safety - that has a simpler design and implementation because thread-safety did not need to be implemented.

If thread-safety can be added on at a later time, then you should provide this as a separate component. An example of this is Java’s synchronizedList. At the same time, this shows you, by way of its implementation: a decorator, that thread-safety was never an integral part of the structure.

Thread-safety as first-class feature

When thread-safety is a first-class feature, then the remarks in the previous section, do not hold for the particular case. An example of a data structure created specifically for thread-safety in concurrent use is Java’s ConcurrentHashMap. It provides the same high-level features as the original hash map (or rather I should say Hashtable, as there are some minor differences with HashMap, such as use of null is not allowed for either key or value), however it is internally structured differently as to provide more granular locking. The benefit of this is improved performance in heavy concurrent use cases.

It is worthwhile to read a part of the general description of ConcurrentHashMap. You’ll notice that there are some very specific instructions on how this Map implementation works. This includes notes on caveats with regard to locking, specific requirements to values (null not allowed in either key or value), considerations for Iterator usage and aggregate functions and happens-before rules that hold with regard to parallel usage. This is a data structure where the whole implementation is centered around concurrent use and thread-safety. In this case, thread-safety clearly is a first-class citizen and many other properties suffer because of it. Trade-offs are made in favor of thread-safety.

Remember that the lock granularity should be strictly finer than a single all-encompassing lock. Having one big lock would mean that you can also go without a lock and instead only wrap the data structure if locking is required. In that case, locking is not a true first-class feature.

Don’t - unnecessary thread-safety

Thread-safety is certainly not necessary for all use cases. In almost all implementations, thread-safety will add overhead. An important indirect issue of this is with the use of composite data structures. If only the composition needs to be thread-safe, then it will need to provide thread-safety for its exact usage patterns only. Depending on what the composite implementation requires, data structures may not need to be made thread-safe at all or only for some very specific use cases. Using a thread-safe data structure in a composition may add overhead for all other cases that need not be thread-safe or where thread-safety is already managed by other parts of the composition.

disclaimer I am writing this from the perspective of high level application developer. For low level development directly to the hardware layer or embedded systems, there are different circumstances. For one, multi-threading may not be available and simply blocking may freeze up the whole system. Similarly, busy waiting (repeatedly checking a condition) could be faster in certain situations or it may be the only mechanism that is permitted. Certain regions in the Linux kernel, for example, do not permit blocking in order to avoid deadlocks. Furthermore, blocking semantics may not be available in the hardware.